Understanding Rate Limiting: A Guide to Staying in Control of Your APIs

Tuesday, December 10, 2024 at 12:37:01 PM GMT+8

What Is Rate Limiting?

At its core, rate limiting is a control mechanism used in software systems, especially APIs, to restrict how many requests a client can make within a specific timeframe.

Think of it as setting the speed limit on a highway. Without it, cars (or requests) might flood the lanes, causing congestion (or a system crash). Rate limiting ensures everyone gets to their destination (or data) without overwhelming the system.

Why Does Rate Limiting Matter?

Imagine running an online service where thousands (or even millions) of users access your API. What happens if one rogue user floods your system with excessive requests?

Without rate limiting, here’s what you might face:

1. Server Overload: Your system might slow down or crash entirely.

2. Unhappy Users: Other users won’t get timely responses, leading to frustration.

3. Increased Costs: Handling unnecessary requests eats up resources.

4. Security Risks: It’s an open invitation for DDoS (Distributed Denial of Service) attacks.

How Does Rate Limiting Work?

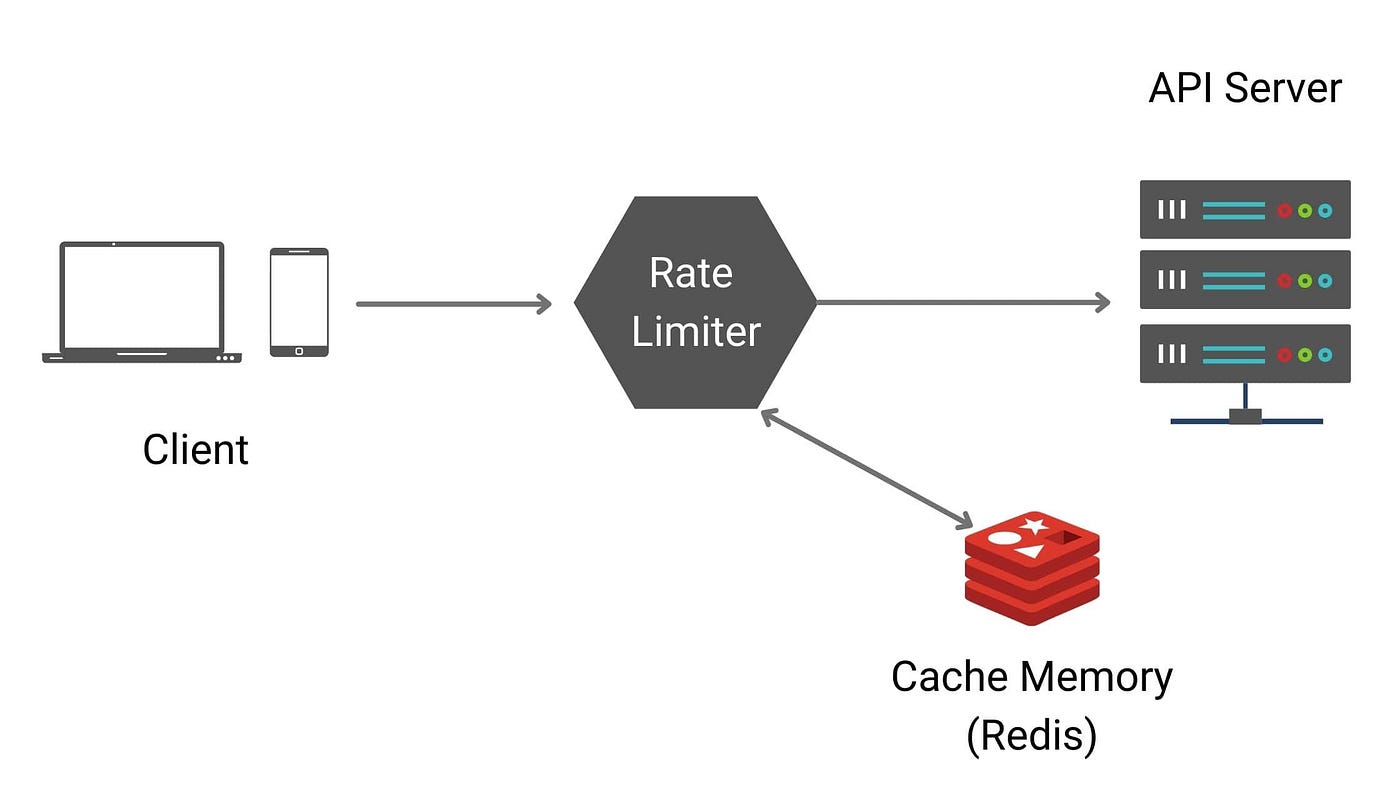

1. The Client Sends a Request

Let’s start with the clients—your users. They might be requesting to fetch data, submit a form, or interact with your app in some way. Every action sends a request to your API server.

Now, without a system in place, too many requests from too many clients could crush the API. This is where the rate limiter steps in.

2. The Rate Limiter Checks the Gate

The rate limiter is your vigilant bouncer. Each incoming request is checked against a set of rules. For example:

- Rule: No more than 10 requests per second per client.

- Rule: A maximum of 1,000 requests per day for premium users.

If a request fits within the rules, it gets a thumbs-up. If not, the rate limiter rejects it with a polite "Sorry, you've reached your limit" (a.k.a. the HTTP 429 Too Many Requests status code).
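To make the gate check concrete, here is a minimal sketch of the decision step. The `RULES` table, the tier names, the `Decision` type, and the `check` function are all hypothetical names chosen for illustration; the limits mirror the two example rules above. It assumes the caller already knows how many requests the client has made in the current window.

```python
from dataclasses import dataclass, field

# Hypothetical rule table based on the two example rules above.
RULES = {
    "standard": {"limit": 10, "window_seconds": 1},         # 10 requests per second
    "premium": {"limit": 1000, "window_seconds": 86_400},   # 1,000 requests per day
}


@dataclass
class Decision:
    status: int                                   # HTTP status code to return
    headers: dict = field(default_factory=dict)   # extra response headers


def check(tier: str, requests_so_far: int) -> Decision:
    """Decide whether one more request fits the rule for this tier.

    requests_so_far is the count already recorded in the current window.
    """
    rule = RULES[tier]
    if requests_so_far < rule["limit"]:
        return Decision(status=200)
    # Over the limit: reject with 429 and hint when to try again.
    # Using the full window length is a conservative assumption.
    return Decision(status=429, headers={"Retry-After": str(rule["window_seconds"])})
```

For example, `check("standard", 9)` allows the tenth request of the second, while `check("standard", 10)` rejects the eleventh with status 429.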

3. Redis: The Silent Helper

Now, how does the rate limiter keep track of all this? Enter Redis, the speedy in-memory data store.

Redis is like a super-efficient notebook that logs each client’s request count. Here’s how it works:

When a request comes in, Redis:

- Checks how many requests the client has already made.

- Updates the tally in real-time.

Redis’s speed and scalability make it perfect for handling this kind of workload.
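The check-and-update step could look roughly like the sketch below. It relies only on two methods that redis-py clients provide, `incr` and `expire`. The key format, the `allow_request` helper, and the `InMemoryCounterStore` stand-in are assumptions made for illustration, so the example runs without a Redis server.

```python
class InMemoryCounterStore:
    """Stand-in exposing the two redis-py methods used below (incr, expire).

    It only records the requested TTL; a real Redis server would delete
    the key once that many seconds have passed.
    """

    def __init__(self):
        self.counts = {}
        self.ttls = {}

    def incr(self, name):
        self.counts[name] = self.counts.get(name, 0) + 1
        return self.counts[name]

    def expire(self, name, time):
        self.ttls[name] = time
        return True


def allow_request(store, client_id, limit=10, window_seconds=1):
    """Count this request and report whether the client is within its limit."""
    key = f"rate:{client_id}"          # hypothetical key naming scheme
    count = store.incr(key)            # update the tally and read it back
    if count == 1:
        # First request in this window: start the countdown to reset.
        store.expire(key, window_seconds)
    return count <= limit


store = InMemoryCounterStore()
results = [allow_request(store, "alice", limit=3, window_seconds=60) for _ in range(5)]
print(results)
```

With a real deployment, a `redis.Redis` client could be passed in place of the stand-in. Note that `incr` followed by `expire` is two separate commands; if that gap matters, a transaction or a Lua script is one way to make the pair atomic.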

4. Forwarding to the API Server

If the request passes the rate limiter’s scrutiny, it’s sent to the API server.

The server processes the request, performs the required action (like retrieving data or updating a record), and sends the response back to the client.

Common Rate Limiting Strategies

Here are some popular methods to implement rate limiting:

1. Fixed Window Algorithm

Think of it as a time bucket. If you allow 100 requests per minute, the count resets every minute.

Example:

With a limit of 100 requests per minute, if a user sends 99 requests in the last second of one window and 100 in the first second of the next, all 199 are accepted within about two seconds, nearly double the intended rate. (Uh-oh!)
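The boundary problem is easy to reproduce with a small in-memory sketch. The `FixedWindowLimiter` class and its parameter names are illustrative assumptions, and the clock is passed in explicitly so the timing is repeatable.

```python
class FixedWindowLimiter:
    """Fixed window counter: one tally per client per window."""

    def __init__(self, limit, window_seconds):
        self.limit = limit
        self.window_seconds = window_seconds
        # (client_id, window_index) -> count. Old windows are never pruned
        # in this sketch; a real limiter would expire them.
        self.counts = {}

    def allow(self, client_id, now):
        window_index = int(now // self.window_seconds)
        key = (client_id, window_index)
        count = self.counts.get(key, 0)
        if count >= self.limit:
            return False
        self.counts[key] = count + 1
        return True


limiter = FixedWindowLimiter(limit=100, window_seconds=60)
# 99 requests just before the minute boundary, 100 just after it.
accepted = sum(limiter.allow("user-1", 59.5) for _ in range(99))
accepted += sum(limiter.allow("user-1", 60.5) for _ in range(100))
print(accepted)  # 199
```

Every request is accepted because the counter starts from zero again at second 60, even though the two bursts are only one second apart.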

2. Sliding Window Algorithm

This method smooths things out by tracking requests over a rolling time window. It’s like always looking back 60 seconds from the current moment to count requests.
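One common way to build this is a sliding window log, which stores the timestamp of each accepted request. The sketch below assumes that variant; the `SlidingWindowLimiter` name and its parameters are illustrative, and the clock is passed in so the behavior is repeatable.

```python
from collections import deque


class SlidingWindowLimiter:
    """Sliding window log: remember when each accepted request arrived."""

    def __init__(self, limit, window_seconds):
        self.limit = limit
        self.window_seconds = window_seconds
        self.timestamps = {}  # client_id -> deque of accepted request times

    def allow(self, client_id, now):
        log = self.timestamps.setdefault(client_id, deque())
        # Drop entries that have fallen out of the look-back window.
        while log and log[0] <= now - self.window_seconds:
            log.popleft()
        if len(log) >= self.limit:
            return False
        log.append(now)
        return True


limiter = SlidingWindowLimiter(limit=100, window_seconds=60)
# 99 requests at t=59.5s, then 100 more at t=60.5s.
accepted = sum(limiter.allow("user-1", 59.5) for _ in range(99))
accepted += sum(limiter.allow("user-1", 60.5) for _ in range(100))
print(accepted)  # 100
```

Only 100 of the 199 requests get through, because the first burst is still inside the 60-second look-back when the second one arrives. The trade-off is memory: the log keeps one timestamp per accepted request.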

3. Token Bucket

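Imagine each user has a bucket filled with tokens. Each request consumes a token, and tokens are added back at a steady rate up to the bucket's capacity. If the bucket is empty, no more requests are processed until it refills. This allows short bursts while capping the long-run rate.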
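A minimal sketch of a single bucket, assuming tokens refill continuously at a fixed rate and the bucket starts full. The `TokenBucket` class and its parameter names are illustrative; the clock is passed in explicitly.

```python
class TokenBucket:
    """Token bucket for one client: spend a token per request, refill over time."""

    def __init__(self, capacity, refill_per_second, now=0.0):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.tokens = float(capacity)  # assumption: the bucket starts full
        self.last_refill = now

    def allow(self, now):
        # Add the tokens earned since the last call, capped at capacity.
        elapsed = max(0.0, now - self.last_refill)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.last_refill = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


bucket = TokenBucket(capacity=5, refill_per_second=1.0)
burst = [bucket.allow(0.0) for _ in range(6)]
print(burst)  # [True, True, True, True, True, False]
```

The first five requests drain the bucket and the sixth is refused. Two seconds later, two tokens have been added back, so two more requests would pass.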

4. Leaky Bucket

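This works like a dripping faucet. Incoming requests wait in a queue (the bucket) and leave it at a constant rate, so even if the user sends requests in bursts, the system processes them at a consistent rate. If the bucket is full, new requests are dropped.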
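The sketch below models the queue-based form of the leaky bucket: requests are queued up to a fixed capacity and released one per leak interval. The `LeakyBucket` class and its `offer` and `drain` methods are hypothetical names, and the clock is passed in explicitly.

```python
from collections import deque


class LeakyBucket:
    """Leaky bucket as a queue: bursts go in, a steady trickle comes out."""

    def __init__(self, capacity, leak_per_second, now=0.0):
        self.capacity = capacity
        self.leak_interval = 1.0 / leak_per_second
        self.queue = deque()
        self.next_leak = now  # earliest time the next request may leave

    def offer(self, request):
        """Queue a request, or return False if the bucket is full."""
        if len(self.queue) >= self.capacity:
            return False
        self.queue.append(request)
        return True

    def drain(self, now):
        """Return the requests due for processing by `now`, one per interval."""
        released = []
        while self.queue and self.next_leak <= now:
            released.append(self.queue.popleft())
            self.next_leak += self.leak_interval
        if not self.queue:
            # An idle bucket does not save up credit for missed leaks.
            self.next_leak = max(self.next_leak, now)
        return released


bucket = LeakyBucket(capacity=3, leak_per_second=2)
accepted = [bucket.offer(i) for i in range(5)]
print(accepted)  # [True, True, True, False, False]
```

A burst of five requests fills the bucket after three, and the queued ones then leave at two per second no matter how quickly they arrived.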

Where Is Rate Limiting Used?

Rate limiting isn’t just for APIs—it’s everywhere!

1. Social Media Platforms: To prevent spamming or abuse (e.g., limiting tweets per minute).

2. E-Commerce Sites: To stop bots from sniping deals during flash sales.

3. Gaming Servers: To ensure fair play and prevent server overloads.

4. Banking APIs: To protect sensitive systems from fraud or misuse.

Why Rate Limiting Is Essential

Rate limiting isn’t just about saying “no.” It’s about balance.

Here’s what it brings to the table:

1. Fair Access: Every client gets a fair chance to use the API without hogging resources.

2. Protection: Helps keep accidental overloads or deliberate attacks (like DDoS) from crashing the system.

3. Cost Efficiency: By controlling traffic, you reduce server strain and save on infrastructure costs.

The Big Picture

With rate limiting, APIs can breathe easy, knowing that they’re protected from chaos while serving users efficiently. It’s not just a technical tool—it’s a safeguard for smooth operations.

So, next time you’re designing an API or interacting with one, remember: there’s a silent hero ensuring everything runs seamlessly. Whether it’s Redis handling the count or the rate limiter enforcing rules, this system is your API’s best friend.
